IoT for fun and Prophet: Scaling IoT and predicting the future

Go from raw time-series data to actionable forecasts with just a few lines of code

I encounter many IoT customers in the wild, and it amazes me how often they’re fighting the same battles: device connectivity, messaging, collecting mountains of real-time data, wrangling large-scale ingestion, and (hopefully) gaining some meaningful analysis.

What’s more, there’s always pressure from the business to build an architecture that’s “just right.” One that can:

- Scale from zero to infinite devices

- Reduce costs, code, and operational headaches

- Retain control over your data

- Stay cloud-agnostic

- Predict the future

Redpanda and its ecosystem tackle these challenges in simple and economical ways. To prove it, I built a scalable IoT data pipeline for real-time streaming and forecasting—all with a light footprint. Let’s dive into the stack I used, then I’ll show you how all these parts fit together using a hands-on, real-world use case.

LIVE TECH TALK: To see this in action and ask me anything, join my tech talk on July 24th. Sign up here!

Unpacking the tech stack

Let’s check what’s in the toolbox:

Redpanda

Redpanda is an event-driven platform that simplifies how you build, operate, and govern streaming, AI, and agentic applications. It’s fully compatible with Apache Kafka® APIs, but without all the Kafka complexity. It also comes with 300+ pre-built connectors, and you can safely spin Redpanda up in your own VPC (with Redpanda BYOC).

For this project, I spun up a managed Redpanda cluster in AWS, which also handles the Redpanda Connect pipelines—these make ingesting data from AWS IoT super straightforward.

Apache Iceberg™

Apache Iceberg is the go-to for data lake storage. It’s fast, scalable, and actually makes querying big time-series datasets possible. It supports schema evolution (so you’re not locked into your first design) and plugs into various analytics tools like Databricks, DuckDB, and Apache Spark™.

AWS IoT

AWS IoT keeps device-to-cloud messaging reliable and secure. It scales nicely and works with lots of other AWS goodies (like Lambda and S3). Other options include HiveMQ or running Mosquitto yourself.

ESP32

The ESP32 is a low-cost, low-power system on a chip (SoC) with integrated Wi-Fi and Bluetooth, designed for IoT as well as embedded and connected devices. It features a dual-core processor and strong community support, making it popular for prototyping and production hardware projects. It’s an obvious choice for affordable, reliable IoT use cases. It costs around $4 (plus a cheap sensor), and you don’t have to fuss with an OS or endless updates. You’ll need to manage things yourself—like the real-time clock—but the simplicity is still hard to beat.

Prophet

Prophet is a Python library for time-series forecasting—super easy to use, handles trends/seasonality, and spits out predictions with a handful of lines. I used Prophet for this example, but in more complex real-world projects you might upgrade to something like Random Forest, XGBoost, or Temporal Fusion Transformers (TFT).

How they play together

Let's build the data pipeline from start to finish. The code (and working examples) are all in this GitHub repository.

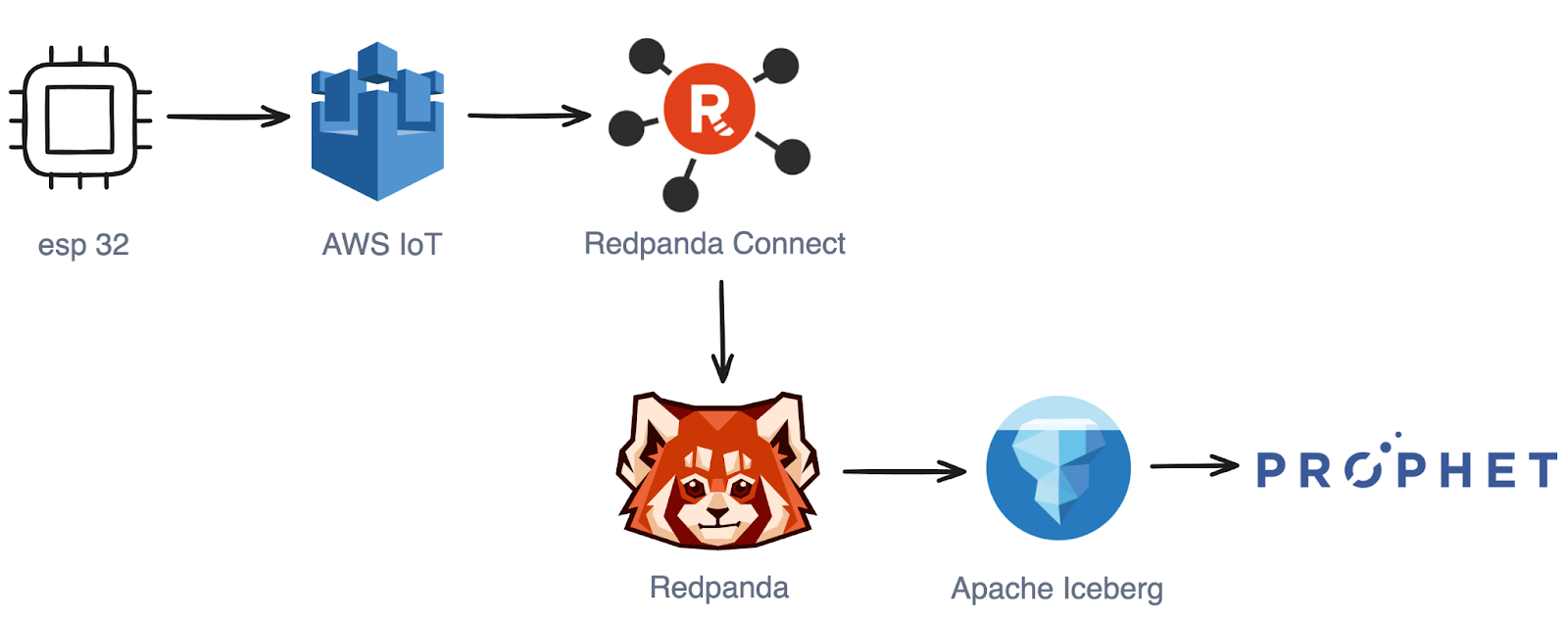

To start, here's how these technologies fit together in the use case of an IoT data pipeline that collects sensor data from the field and then sends it along for valuable analytics. This pipeline uses the ever-popular MQTT with the data lakehouse frontrunner, Iceberg. Redpanda easily ties everything together using its native connectors in Redpanda Connect.

Step 1: Use an MQTT library to send sensor readings to AWS IoT

Using the Arduino MQTT client, after configuring the server and authentication, all you need is a topic and a message to send data to the AWS IoT service. ESP32 devices stream sensor data (temperature and humidity) securely to AWS IoT using MQTT.
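To exercise the rest of the pipeline without a device on hand, the same payload shape can be produced and checked off-device. This is a minimal Python sketch, assuming only the two fields the devices send (temperature and humidity); the helper names `build_payload` and `parse_payload` are made up for illustration and are not part of any library.

```python
import json


def build_payload(temperature: float, humidity: float) -> str:
    """Serialize one reading as compact JSON (hypothetical helper)."""
    # separators=(",", ":") drops the spaces json.dumps adds by default
    return json.dumps(
        {"temperature": temperature, "humidity": humidity},
        separators=(",", ":"),
    )


def parse_payload(raw: str) -> dict:
    """Parse a payload and check that both expected fields are numeric."""
    reading = json.loads(raw)
    for field in ("temperature", "humidity"):
        if not isinstance(reading.get(field), (int, float)):
            raise ValueError(f"missing or non-numeric field: {field}")
    return reading


payload = build_payload(22.5, 60)
print(payload)  # {"temperature":22.5,"humidity":60}
print(parse_payload(payload)["temperature"])
```

Validating at the edge of the pipeline like this is optional, but it catches malformed readings before they reach a topic.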

In your device code, this is all you need to publish a message:

    // ESP32 MQTT Example
    mqttClient.publish("sensor/data", "{\"temperature\":22.5,\"humidity\":60}");

Step 2: Use Redpanda Connect to deliver messages to Redpanda BYOC

Redpanda Connect can bridge the MQTT messages from AWS IoT into Redpanda topics for long-term storage and integration with other streaming solutions.

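    input:
      mqtt:
        # MQTT broker endpoint and the topic the devices publish to
        urls: ["tcp://mqtt-broker:1883"]
        topics: ["sensor/data"]

    output:
      kafka:
        # Redpanda speaks the Kafka API, so the kafka output works as-is
        addresses: ["redpanda:9092"]
        topic: "iot_sensor_data"

Step 3: Write sensor data to Iceberg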

Redpanda writes sensor data directly to Iceberg tables, while schema management is handled through Redpanda’s built-in schema registry. Here’s an example Avro schema:

    {
      "type": "record",
      "name": "SensorData",
      "fields": [
        {"name": "sensor_id", "type": "string"},
        {"name": "temperature", "type": "float"},
        {"name": "humidity", "type": "float"},
        {"name": "event_time", "type": {"type": "long", "logicalType": "timestamp-millis"}}
      ]
    }

This schema is registered once, and Redpanda and Iceberg keep everything consistent for you. To configure the topic to export all of its data into an Iceberg table, simply run:

    rpk topic alter-config iot_sensor_data --set redpanda.iceberg.mode=value_schema_id_prefix

Note that the topic was already created by Redpanda Connect in the Connect pipeline.
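The `value_schema_id_prefix` mode relies on each record value starting with a schema ID prefix. As I understand it, this is the common Schema Registry wire format: one zero "magic" byte, then the schema ID as a 4-byte big-endian integer, then the Avro-encoded body. Here is a minimal Python sketch of that framing, assuming that layout; the `frame` and `unframe` helpers, the schema ID `1`, and the placeholder payload bytes are all made up for illustration.

```python
import struct

MAGIC_BYTE = 0  # first byte of the assumed Schema Registry wire format


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an already Avro-encoded payload with magic byte + schema ID."""
    # ">bI" = big-endian, 1 signed byte followed by a 4-byte unsigned int
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Split a framed message back into (schema_id, avro_payload)."""
    if len(message) < 5:
        raise ValueError("message too short for a schema ID prefix")
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[5:]


# Placeholder bytes stand in for a real Avro-encoded SensorData record.
framed = frame(1, b"<avro bytes>")
print(framed[:5].hex())
print(unframe(framed))
```

In practice a schema-registry-aware serializer (or a Redpanda Connect processor) would normally add this prefix for you; the sketch only shows what ends up on the wire.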

Step 4: Pull predictive analytics with Prophet

Now that the Iceberg table is automatically created, Prophet takes over for forecasting, letting you turn your pile of historical sensor data into actual predictions. Here’s the basic workflow:

    import pandas as pd
    from prophet import Prophet

    # `connection` is assumed to be an open connection to a query engine that can read the Iceberg table
    df = pd.read_sql("SELECT event_time as ds, temperature as y FROM sensor_data", connection)

    model = Prophet()
    model.fit(df)

    future = model.make_future_dataframe(periods=168, freq='H')  # 7 days ahead
    forecast = model.predict(future)

Let’s unpack what’s happening here:

- Pull the data: You’re querying your sensor readings from Iceberg and sticking them in a pandas DataFrame. Prophet wants two columns: `ds` (datestamp) and `y` (the value to forecast, temperature here).

- Model training: You build your Prophet model and fit it to your historical data. Prophet is pretty robust; if you’ve got missing points or your data is a little weird, it’ll generally handle it.

- Define the forecast window: `make_future_dataframe` is where you decide how far ahead you want predictions. Here, 168 hours means a week’s worth of hourly forecasts.

- Generate the forecast: Call `predict()` and Prophet returns a DataFrame with your predictions, plus extra goodies like uncertainty intervals and trend/seasonality breakdowns if you want them.
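To make the forecast window concrete, here is a rough pure-Python sketch of the timestamps a 168-period hourly horizon covers. It only mimics the future part of what `make_future_dataframe` produces (by default Prophet also includes the historical dates); the `future_timestamps` helper and the starting timestamp are hypothetical.

```python
from datetime import datetime, timedelta


def future_timestamps(last_observed, periods, step=timedelta(hours=1)):
    """Return `periods` timestamps, starting one step after the last observation."""
    return [last_observed + step * i for i in range(1, periods + 1)]


# Hypothetical timestamp of the last reading in the history.
last = datetime(2024, 1, 1, 0, 0)
horizon = future_timestamps(last, periods=168)

print(len(horizon))   # number of forecast points
print(horizon[0])     # first forecast timestamp, one hour after the last reading
print(horizon[-1])    # last forecast timestamp, seven days after the last reading
```

Changing `periods` or `step` is all it takes to reason about a different horizon, such as 24 hourly points for a day ahead.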

The beauty: you go from raw time-series data to actionable forecasts with just a few lines of code. Swapping Prophet out for something fancier later is easy—the pipeline shape doesn’t change.

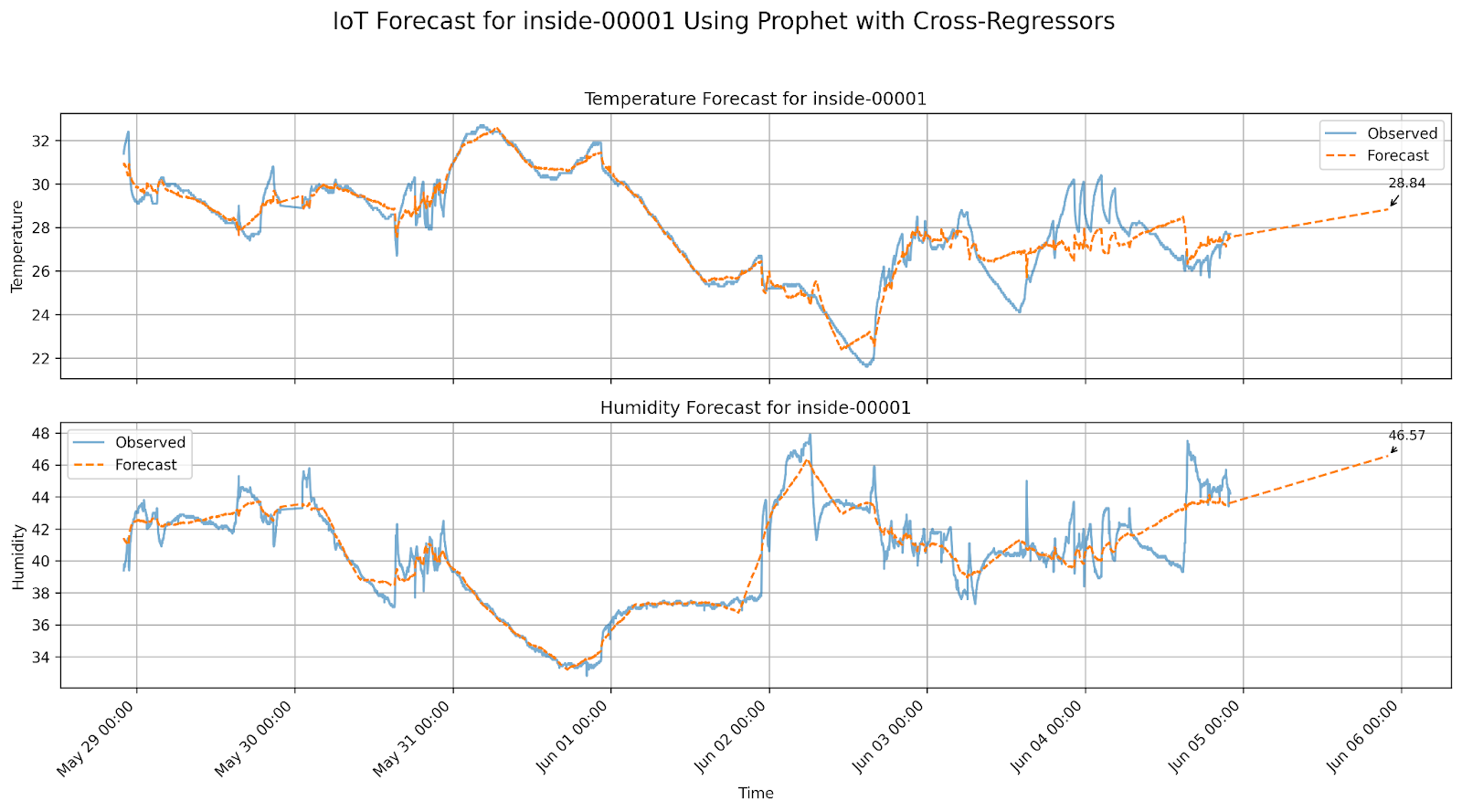

The results: real-time insight to predict the future

With this stack, you get a genuinely simple pipeline: real-time streaming, scalable storage, and forecasting—all with a light footprint. Scaling from a handful to thousands of devices doesn’t mean a dramatic spike in complexity or cost.

Fun fact: this is real data from my office. You can clearly see when I built and fired up a large server on the evening of June 2nd: the temperature and humidity went nuts. Predicting my office microclimate isn’t world-changing, but imagine the value for any business: less downtime, better resource use, and actual insight, not just hindsight.

A word to the wise

If you’re like most folks building in IoT, you’ll hit the same stumbling blocks: integrating low-power devices, scaling ingestion without a cloud bill that makes you wince, and keeping analytics approachable. What I like about this pattern is that it doesn’t demand you be a distributed systems wizard or a data engineer with a PhD. The Redpanda + Iceberg combo genuinely lowers the barrier.

A practical note: don’t underestimate how much easier your life will be with proper schema management from day one. Avro + Iceberg, with Redpanda’s Schema Registry, means you won’t be chasing weird “mystery column” bugs at 2 AM when you add new fields.
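One concrete habit that helps here: when adding a field to an Avro schema, give it a default so records written with the old schema can still be read with the new one. That is Avro's general schema-resolution rule; how Redpanda and Iceberg apply it is not shown here. Below is a small Python sketch of a pre-registration check, where the `fields_missing_defaults` helper and the `battery_v` field are hypothetical.

```python
def fields_missing_defaults(old_schema: dict, new_schema: dict) -> list:
    """List fields added in new_schema that have no default value."""
    old_names = {field["name"] for field in old_schema["fields"]}
    return [
        field["name"]
        for field in new_schema["fields"]
        if field["name"] not in old_names and "default" not in field
    ]


old = {
    "type": "record",
    "name": "SensorData",
    "fields": [
        {"name": "sensor_id", "type": "string"},
        {"name": "temperature", "type": "float"},
    ],
}

# Hypothetical new field, added once without and once with a default.
risky = dict(old, fields=old["fields"] + [{"name": "battery_v", "type": "float"}])
safe = dict(
    old,
    fields=old["fields"]
    + [{"name": "battery_v", "type": ["null", "float"], "default": None}],
)

print(fields_missing_defaults(old, risky))
print(fields_missing_defaults(old, safe))
```

A schema registry's compatibility checks typically enforce this kind of rule for you; the sketch just shows what they are looking for.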

Also, while Prophet is fun for demos, real business apps will quickly demand features like anomaly detection, automated retraining, and integrating external signals (weather, operations, etc.). The good news is that the underlying data pipeline supports plugging in more advanced models without a total rewrite.
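As a first cut at anomaly detection, one simple approach is to flag readings that fall outside the forecast's uncertainty interval. The sketch below is plain Python over dictionaries rather than a DataFrame; it assumes forecast rows carry Prophet's `ds`, `yhat_lower`, and `yhat_upper` columns, and the `flag_anomalies` helper and all sample values are invented for illustration.

```python
def flag_anomalies(readings: dict, forecast: list) -> list:
    """Return (timestamp, value) pairs that fall outside the forecast band.

    readings: maps a timestamp to the observed value
    forecast: rows with "ds", "yhat_lower", and "yhat_upper" keys
    """
    anomalies = []
    for row in forecast:
        actual = readings.get(row["ds"])
        if actual is None:
            continue  # no observation for this timestamp yet
        if actual < row["yhat_lower"] or actual > row["yhat_upper"]:
            anomalies.append((row["ds"], actual))
    return anomalies


# Invented sample values, not real measurements.
sample_forecast = [
    {"ds": "2024-01-01 00:00", "yhat_lower": 21.0, "yhat_upper": 24.0},
    {"ds": "2024-01-01 01:00", "yhat_lower": 21.0, "yhat_upper": 24.0},
    {"ds": "2024-01-01 02:00", "yhat_lower": 21.0, "yhat_upper": 24.0},
]
sample_readings = {"2024-01-01 00:00": 22.5, "2024-01-01 01:00": 27.8}

print(flag_anomalies(sample_readings, sample_forecast))
```

This is deliberately naive: it treats every excursion as an anomaly, so a production setup would likely add smoothing or a minimum duration before alerting.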

Wrapping up

So, that’s a practical example of how you can glue together ESP32, AWS IoT, Redpanda Connect, Redpanda, Apache Iceberg, and Prophet for a reliable, scalable, and durable IoT data pipeline. Whether you’re tinkering at home or supporting thousands of sensors, this stack might just keep you out of trouble and help you see problems coming before they hit.

The main takeaway is that modern data streaming doesn’t have to be complicated or expensive. With a few right-sized tools, you can focus more on experimenting and less on fighting infrastructure.

If you're interested in learning more and want to see this whole experiment in action, watch my IoT tech talk for a live walkthrough. Happy streaming!
