
Streaming data plays a pivotal role wherever real-time information powers monitoring and analysis, especially in high-stakes industries like finance, healthcare, manufacturing, e-commerce, and other data-driven businesses. Cloud platforms, and Google Cloud Platform (GCP) in particular, excel at processing and analyzing vast, fast-growing data.

GCP's scalability, cost-effectiveness, managed services, global reach, integration capabilities, security, and user-friendly interface make it a preferred choice for businesses of all sizes.

This post explores architectural considerations for managing high-volume streaming data on GCP. We'll explain deployment options, like Google Kubernetes Engine (GKE) and Google Compute Engine (GCE), as well as storage solutions like Google Cloud Storage (GCS) and Local SSD.

We'll also cover data ingestion, network optimization, and storage strategies for high-volume streaming data, and wrap it all up with how GCP integrates with Redpanda.

High-volume streaming data refers to an ongoing flow of information generated at an intense pace from various sources, such as sensors, devices, applications, and social media platforms. It's distinguished by its high rate of data ingestion, high resource requirements, and difficulty in scaling.

These characteristics create unique challenges and opportunities for data processing and analysis. For example, you may need to analyze, in real time, how users behave on websites and applications that get millions of visits a month.

Here are the key characteristics of high-volume streaming data:

- It can reach petabytes in volume daily.
- It arrives quickly, in real time or near-real time.
- It flows continuously.
- It comes in many formats.
- It may vary unpredictably in volume, velocity, and data quality.

Optimizing high-volume streaming data ingestion involves making smart architectural choices, selecting an effective technology stack, and undertaking regular monitoring and maintenance activities. For optimal results, you also need to make sure these strategies fit your specific use case and data volume.

When developing an architecture for high-volume streaming data ingestion, you need to consider the following:

- Scalability to accommodate growing data volumes
- Capacity for rapid data ingestion, backed by an efficient ingestion layer
- Seamless integration with multiple data sources
- Real-time processing engines for quick insights
- Security measures to protect data
- Cost-effective strategies that limit unnecessary overhead expenses

Given these considerations, Redpanda on GCP is an efficient platform for high-volume data ingestion that offers scalability, reliability, and availability. With Redpanda Cloud on GCP, Redpanda manages the cluster for you, so you can focus on app development.

The platform scales horizontally to accommodate increasing data volumes, and it preserves data integrity by replicating data across nodes and failing over when a node goes down. Its distributed architecture keeps data available, so ingestion can continue and recover quickly after failures.
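Redpanda replicates each partition using the Raft consensus protocol, so in a simplified model a partition stays writable as long as a majority of its replicas are reachable. The sketch below applies that majority rule to check whether a replica placement survives the loss of any single zone. The zone names and placements are hypothetical, and the model ignores details like leader election timing.

```python
def has_quorum(replica_zones: list[str], failed_zones: set[str]) -> bool:
    """Return True if a majority of replicas survive the failed zones.

    Simplified Raft-style model: a partition can keep accepting writes
    while more than half of its replicas are reachable.
    """
    alive = sum(1 for zone in replica_zones if zone not in failed_zones)
    return alive > len(replica_zones) // 2


def survives_any_single_zone_loss(replica_zones: list[str]) -> bool:
    """Check every possible single-zone outage for this placement."""
    return all(has_quorum(replica_zones, {zone}) for zone in set(replica_zones))


# Hypothetical placements for a partition with replication factor 3.
spread = ["zone-a", "zone-b", "zone-c"]  # one replica per zone
packed = ["zone-a", "zone-a", "zone-b"]  # two replicas share a zone

print(survives_any_single_zone_loss(spread))  # True
print(survives_any_single_zone_loss(packed))  # False: losing zone-a leaves 1 of 3
```

Spreading replicas across zones is what lets a single zone outage leave a majority intact.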

The best part? Redpanda integrates with GCP tools like Cloud Functions, BigQuery, and Dataflow. For example, you can trigger Cloud Functions over HTTP, and you can stream or batch data directly into BigQuery tables.
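As a rough sketch of the HTTP path, the snippet below packages a batch of records as a JSON POST request using only Python's standard library. The function URL and record fields are hypothetical placeholders, and the request is built but not sent, so you can inspect it before pointing it at a real endpoint.

```python
import json
import urllib.request

# Hypothetical HTTP-triggered Cloud Function URL; replace with your own.
FUNCTION_URL = "https://example-region-example-project.cloudfunctions.net/ingest"


def build_batch_request(records: list[dict], url: str = FUNCTION_URL) -> urllib.request.Request:
    """Wrap a batch of records in a JSON POST request (not sent here)."""
    body = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_batch_request([
    {"user_id": 1, "event": "click"},
    {"user_id": 2, "event": "view"},
])
print(req.get_method())                # POST
print(req.get_header("Content-type"))  # application/json
```

To actually send it, you could pass the request to `urllib.request.urlopen(req, timeout=10)`. If your function requires authentication, you'd also need to attach a suitable identity token.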

GCP offers several deployment options for high-volume streaming data. Two of the most popular are Google Kubernetes Engine (GKE) and Google Compute Engine (GCE).

GKE is a managed Kubernetes service that lets you deploy and manage containerized applications at scale using Google's infrastructure. You can easily scale your deployment by adding nodes or pods as necessary, and horizontal scaling ensures you can handle high data volumes. High availability is provided across multiple nodes and zones, so if one node or zone goes offline, Kubernetes automatically reschedules pods to maintain service continuity.
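To estimate how far you'll need to scale out, a back-of-the-envelope calculation helps. The sketch below converts a daily data volume into an average ingest rate and then into a minimum node count. The per-node throughput and headroom factor are hypothetical inputs; benchmark your own workload to get real values.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def sustained_throughput_mb_s(bytes_per_day: float) -> float:
    """Average ingest rate in MB/s (1 MB = 10**6 bytes) for a daily volume."""
    return bytes_per_day / SECONDS_PER_DAY / 1e6


def nodes_needed(bytes_per_day: float, per_node_mb_s: float, headroom: float = 2.0) -> int:
    """Minimum node count for the average rate, scaled by a headroom factor
    to absorb peaks. Both per_node_mb_s and headroom are assumptions."""
    required = sustained_throughput_mb_s(bytes_per_day) * headroom
    return math.ceil(required / per_node_mb_s)


# Example: 10 TB/day with a hypothetical 200 MB/s per node and 2x headroom.
daily = 10 * 10**12
print(round(sustained_throughput_mb_s(daily), 1))  # 115.7
print(nodes_needed(daily, per_node_mb_s=200))      # 2
```

This ignores replication traffic: with a replication factor of 3, each ingested byte is written to three nodes, so per-node write load rises accordingly.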

Google Compute Engine is a component of GCP that provides virtual machines (VMs) for running applications on Google's infrastructure. GCE gives you fine-grained control over cluster scalability by letting you manually add or remove VM instances as necessary. You can choose from a variety of predefined machine types or customize your VMs based on specific requirements, such as CPU, memory, and storage.

GCP offers numerous storage solutions designed specifically to handle high-volume streaming systems efficiently in terms of data ingestion, storage, and processing.

GCS is a scalable object storage service that accommodates large volumes of unstructured data, including log files, images, videos, and backups. It's a reliable and highly available storage option, which is ideal for streaming systems that need to store raw data ingested via their APIs.

Local SSDs are temporary block storage volumes attached to Compute Engine virtual machines. They offer very high performance, but their data isn't persistent: it can be lost when the VM stops or is deleted. That makes Local SSDs a good fit for streaming data that needs fast access but only a short stay on disk.
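One way to combine the two is to keep recent, frequently read data on Local SSD and place older data in GCS. The sketch below shows a simple age-based placement rule. The 24-hour window and tier names are hypothetical, and because Local SSD data isn't persistent, anything stored there should also be recoverable from a durable copy.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: data younger than HOT_WINDOW stays on Local SSD.
HOT_WINDOW = timedelta(hours=24)


def choose_tier(written_at: datetime, now: datetime) -> str:
    """Return 'local-ssd' for recent data and 'gcs' for older data."""
    age = now - written_at
    return "local-ssd" if age < HOT_WINDOW else "gcs"


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
print(choose_tier(now - timedelta(hours=2), now))  # local-ssd
print(choose_tier(now - timedelta(days=3), now))   # gcs
```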

As streaming data volumes continue to grow, you'll need to implement effective network optimization techniques to maintain system performance and prevent bottlenecks. The following are some effective network optimization strategies for high-volume streaming systems.

Load balancers distribute incoming traffic across multiple servers, which can improve performance and reduce latency. For high-volume streaming systems, use global load balancers to spread traffic across regions, or regional load balancers to spread it across zones within a region. This helps prevent bottlenecks and gives users a good experience regardless of their location.
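To illustrate the idea of sending users to nearby capacity, here's a simplified routing sketch: each request goes to the lowest-latency region that still has spare capacity and spills over to the next-closest region when that one is full. This is a teaching model with hypothetical region names, latencies, and capacities, not the actual algorithm GCP's load balancers use.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    latency_ms: float  # measured latency from the client (hypothetical)
    capacity: int      # max concurrent requests (hypothetical)
    in_flight: int = 0


def route(regions: list[Region]) -> str:
    """Send the request to the closest region with spare capacity."""
    for region in sorted(regions, key=lambda r: r.latency_ms):
        if region.in_flight < region.capacity:
            region.in_flight += 1
            return region.name
    raise RuntimeError("all regions are at capacity")


regions = [
    Region("region-a", latency_ms=20, capacity=2),
    Region("region-b", latency_ms=35, capacity=2),
    Region("region-c", latency_ms=90, capacity=2),
]
print([route(regions) for _ in range(5)])
# ['region-a', 'region-a', 'region-b', 'region-b', 'region-c']
```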

Compressing data can significantly reduce the bandwidth needed to transmit it over the network, which matters especially for high-volume streaming systems. Several compression algorithms, such as gzip, can serve this purpose.
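To see how much gzip can save on repetitive streaming payloads, the sketch below compresses a batch of synthetic JSON events with Python's built-in `gzip` module. The event shape is made up; real compression ratios depend heavily on your data.

```python
import gzip
import json

# Hypothetical batch of repetitive JSON events, similar to clickstream data.
events = [{"user_id": i % 50, "event": "page_view", "path": "/products"} for i in range(1000)]
raw = "\n".join(json.dumps(e) for e in events).encode("utf-8")

compressed = gzip.compress(raw)
ratio = len(raw) / len(compressed)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes, ratio: {ratio:.1f}x")

# Compression is lossless: decompressing restores the exact bytes.
assert gzip.decompress(compressed) == raw
```

Compression trades CPU time for bandwidth, so it's worth measuring both on representative data before enabling it.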

A content delivery network (CDN) is a network of servers that are distributed around the world. When a user requests a static file, such as an image or a video, the CDN will try to serve it from a server that is as close to the user as possible. This can help reduce latency and improve the user experience.

Network monitoring tools can identify and troubleshoot network problems, such as congestion and packet loss. Catching these problems early can help prevent outages and keep your high-volume streaming system performing at its best.
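As a minimal illustration of one metric such tools compute, here's a packet-loss check over two counter readings. The link names, counter values, and 1% alert threshold are hypothetical; real monitoring tools collect these counters for you.

```python
def packet_loss_pct(sent: int, received: int) -> float:
    """Percentage of packets lost between two counter readings."""
    if sent <= 0:
        return 0.0
    return 100.0 * (sent - received) / sent


# Hypothetical alert threshold; tune it to your network's baseline.
LOSS_ALERT_PCT = 1.0


def check_link(name: str, sent: int, received: int) -> str:
    """Format a one-line status, flagging links at or above the threshold."""
    loss = packet_loss_pct(sent, received)
    status = "ALERT" if loss >= LOSS_ALERT_PCT else "ok"
    return f"{name}: {loss:.2f}% loss [{status}]"


print(check_link("link-1", sent=100_000, received=99_950))  # link-1: 0.05% loss [ok]
print(check_link("link-2", sent=100_000, received=97_000))  # link-2: 3.00% loss [ALERT]
```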

Virtual private cloud (VPC) peering allows direct communication between different VPCs within the same or different regions.

For high-volume streaming systems with distributed components across multiple VPCs, establishing VPC peering connections facilitates efficient and secure data exchange. This strategy streamlines communication, reduces latency, and enhances the overall connectivity and collaboration between different segments of the infrastructure.
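One practical prerequisite for peering is that subnet IP ranges in the peered networks must not overlap. The sketch below uses Python's `ipaddress` module to find conflicting ranges before you attempt a peering. The network names and CIDR blocks are hypothetical.

```python
import ipaddress
from itertools import product


def overlapping_ranges(net_a: list[str], net_b: list[str]) -> list[tuple[str, str]]:
    """Return every pair of CIDR ranges that overlap between two networks."""
    conflicts = []
    for a, b in product(net_a, net_b):
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b)):
            conflicts.append((a, b))
    return conflicts


# Hypothetical subnet ranges for two VPCs you want to peer.
ingest_vpc = ["10.10.0.0/20", "10.10.16.0/20"]
analytics_vpc = ["10.20.0.0/20", "10.10.8.0/24"]

print(overlapping_ranges(ingest_vpc, analytics_vpc))
# [('10.10.0.0/20', '10.10.8.0/24')]
```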

VPC is a critical component of network optimization strategies for high-volume streaming data. It provides a secure and isolated environment for deploying and managing high-volume streaming data applications and infrastructure.

By using VPC features, organizations can achieve enhanced security, customizable network configuration, scalability, and more. VPC and shared VPC are networking options within GCP that allow you to set up and control isolated network environments for your resources.

VPC provides network isolation, which lets you establish a dedicated environment for high-volume streaming data systems. With VPC, you also have complete control over network architecture, such as IP address ranges, routing rules, and firewall rules. However, a VPC can be complex to configure and manage.
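To illustrate the kind of control firewall rules give you, here's a simplified model of ingress evaluation: rules are checked in priority order (in GCP, a lower number means a higher priority), the first matching rule decides, and traffic that matches nothing is denied, mirroring GCP's implied deny-ingress rule. The model ignores targets, tags, protocols, and tie-breaking between equal priorities, and the rules themselves are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class IngressRule:
    priority: int      # lower number = evaluated first
    action: str        # "allow" or "deny"
    source_range: str  # CIDR the traffic must come from
    ports: set[int]    # destination ports the rule covers


def evaluate(rules: list[IngressRule], source_ip: str, port: int) -> str:
    """Return the action of the highest-priority matching rule, or 'deny'."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if ip in ipaddress.ip_network(rule.source_range) and port in rule.ports:
            return rule.action
    return "deny"  # no match: implied deny for ingress


# Hypothetical rules: block one subnet, then let the wider app range reach a broker port.
rules = [
    IngressRule(priority=900, action="deny", source_range="10.10.5.0/24", ports={9092}),
    IngressRule(priority=1000, action="allow", source_range="10.10.0.0/16", ports={9092}),
]
print(evaluate(rules, "10.10.1.7", 9092))   # allow
print(evaluate(rules, "10.10.5.20", 9092))  # deny (priority 900 matches first)
print(evaluate(rules, "192.0.2.1", 9092))   # deny (no rule matches)
```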

A shared VPC allows multiple projects to share a common VPC network, which simplifies network management because resources and networking settings can be centralized. A shared VPC also allows for consistent network policies and firewall rules across projects, providing uniform security configurations. Heads up: setting up a shared VPC can be complicated, and configuration mistakes, such as granting unintended access to resources, can lead to security and management problems.

Storing historical streaming data requires specialized solutions for handling high volume, velocity, and timeliness while ensuring low latency access and cost-effectiveness. Strategies include the following:

Redpanda's Tiered Storage also provides a practical and cost-effective solution for storing historical streaming data. It can seamlessly integrate with Google Cloud Storage and offers the following ways to optimize historical streaming data storage:

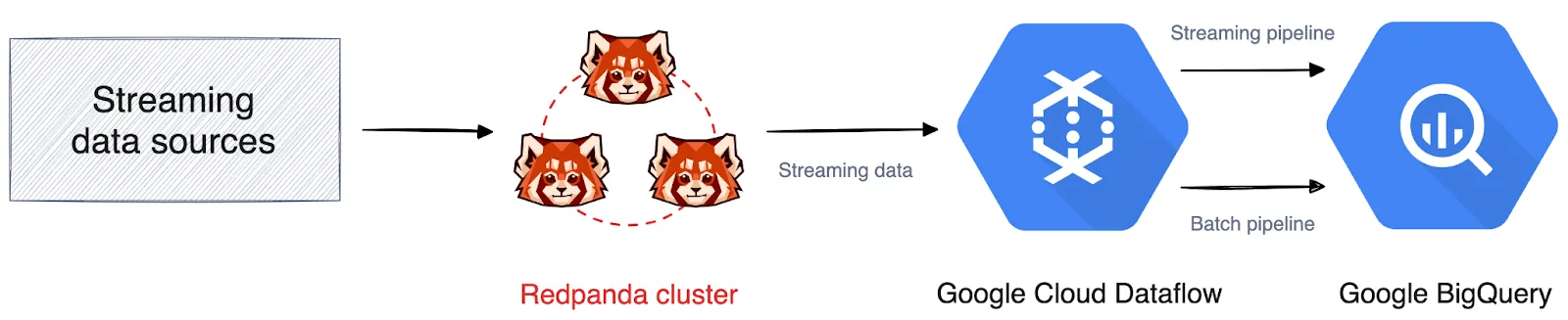
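To see why tiering saves local disk, consider the arithmetic: with Tiered Storage, brokers only need local disk for the recent window you keep locally, while older data lives in object storage such as Google Cloud Storage. The sketch below compares the two layouts, assuming local copies are replicated across brokers while object storage holds a single copy. The ingest rate, retention windows, and replication factor are hypothetical inputs.

```python
SECONDS_PER_HOUR = 3600


def storage_gb(ingest_mb_s: float, hours: float, copies: int = 1) -> float:
    """Data retained for `hours` at a steady ingest rate, in GB (10**9 bytes)."""
    return ingest_mb_s * 1e6 * hours * SECONDS_PER_HOUR * copies / 1e9


ingest = 100           # MB/s, hypothetical steady ingest rate
replication = 3        # replicas kept on local broker disks
total_hours = 30 * 24  # keep 30 days of history
local_hours = 24       # keep only the last day on local disk

all_local = storage_gb(ingest, total_hours, replication)
tiered_local = storage_gb(ingest, local_hours, replication)
tiered_cloud = storage_gb(ingest, total_hours)  # assumed: one copy in object storage

print(f"all local disk:       {all_local:,.0f} GB")     # 777,600 GB
print(f"tiered, local disk:   {tiered_local:,.0f} GB")  # 25,920 GB
print(f"tiered, object store: {tiered_cloud:,.0f} GB")  # 259,200 GB
```

Under these assumptions, local disk shrinks to the one-day window, and the bulk of the history moves to cheaper object storage.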

Integrating Redpanda and GCP supports many of the strategies outlined above. To get an idea of how it works, here's a diagram that illustrates high-volume streaming data with Redpanda and GCP technologies:

Architecture diagram for high-volume streaming data with Redpanda and GCP technologies

Redpanda handles the data ingestion and receives all the streaming data that needs to be processed. It then sends the data to Google Cloud Dataflow where it's processed and transformed in real time. The processed and transformed data is then loaded into Google BigQuery for storage and analysis.
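To make the flow concrete, here's a toy, in-memory model of the three stages, with plain Python standing in for each service: a list plays the Redpanda topic, a generator function plays the Dataflow transform, and another list plays the BigQuery table. It only shows how records move between stages; the names and data are hypothetical, and none of them are real client APIs.

```python
from typing import Iterable, Iterator

# Stand-in for records consumed from a Redpanda topic (hypothetical data).
topic = [
    {"user_id": 1, "amount_cents": 1250, "currency": "USD"},
    {"user_id": 2, "amount_cents": -50, "currency": "USD"},  # invalid amount
    {"user_id": 3, "amount_cents": 999, "currency": "EUR"},
]


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Stand-in for the Dataflow step: drop invalid rows and reshape the rest."""
    for r in records:
        if r["amount_cents"] < 0:
            continue
        yield {"user_id": r["user_id"], "amount": r["amount_cents"] / 100, "currency": r["currency"]}


# Stand-in for the BigQuery table that receives the processed rows.
table: list[dict] = []
table.extend(transform(topic))

print(table)
# [{'user_id': 1, 'amount': 12.5, 'currency': 'USD'}, {'user_id': 3, 'amount': 9.99, 'currency': 'EUR'}]
```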

This architecture ensures scalable and reliable streaming data ingestion, real-time processing, and storage in Google Cloud. If you're curious, we wrote a tutorial on how to stream data from Redpanda to BigQuery for advanced analytics.

Handling high-volume streaming data on GCP requires careful architectural consideration to ensure scalability, reliability, and real-time data processing. GCP offers many services and tools to meet this demand, but selecting the appropriate combination is crucial. In this context, Redpanda is a powerful high-throughput, low-latency stream processing platform that can seamlessly integrate with GCP.

To learn more about running Redpanda on GCP, browse our documentation and check out our Google BigQuery sink connector. Questions? Ask the Redpanda Community on Slack!
