Real-time AI: what is it and why it needs streaming data

How streaming data takes your AI from reactive responses to proactive problem-solving

AI this, AI that — it’s everywhere and it’s inevitable. But between the eye rolls and the occasional, “who even needs this,” there are certain realms of AI that merit exploring. Real-time AI (or “proactive intelligence”) is one of them.

Real-time AI is how autonomous vehicles make split-second navigation and safety decisions, and how fraudulent transactions get flagged in milliseconds. It’s also how emergency rooms can instantly prioritize critical cases as data comes in.

So if you’re building streaming data applications, you’ll want to learn about real-time AI before everyone else gets too far ahead.

The topic is so important that we even published an O’Reilly report on streaming data for real-time AI that you can download and read at your leisure. It’s packed with helpful info, and you’ll feel smarter just having it on your desktop (isn’t that what PDFs are really for?).

To help you understand why you’d even want to read it, this post gives you a taster of what’s inside. (Even if you don’t want the report, you’ll still learn a whole lot about real-time AI. Either way, you win.)

Let’s get into it.

What is real-time AI?

The gap between insight and action is shrinking to milliseconds, and organizations that can't keep up are losing ground to those that can.

That’s the fundamental truth of the report. In brief, traditional AI relies on batch processing: collect data, store it, and analyze it hours or days later. But by the time batch-processed insights arrive, the ideal moment to take action has passed. Markets have moved on, threats have evolved, and golden opportunities have disappeared.

Real-time AI is an advanced breed of AI systems that can process data, make decisions, and generate outputs instantly (or with extremely low latency) as events happen, rather than after a delay.

It isn't just about processing data faster. It's about creating systems that perceive, interpret, and act as events unfold. Think of real-time AI as a super-smart intern, or a wildly productive employee who doesn’t argue with the project lead. How neat is that?

Why streaming is best for real-time AI

The report covers three core messaging patterns that enable real-time systems — point-to-point (queue), publish-subscribe (pub/sub), and event streaming.

Real-time processing can enhance all those patterns to deliver smarter outputs, but for real-time AI, you want to use event streaming. This is because event streaming is designed for high-volume, continuous data processing where events are persistently logged into a stream, allowing multiple consumers to read and process events in order.

Essentially, event streaming provides persistent, replayable logs of everything that has happened so AI models can learn from historical patterns while responding to current events.
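To make the idea of a persistent, replayable log concrete, here is a minimal in-memory sketch in Python. It is not how Redpanda or Kafka are implemented or used: the `EventLog` class and its methods are hypothetical, and a real broker adds partitions, durability, and consumer groups. It only shows the two properties described above: every consumer reads events in order at its own offset, and any consumer can rewind to replay history.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class EventLog:
    """Toy in-memory append-only log (hypothetical; not a real broker API)."""
    events: list[Any] = field(default_factory=list)
    # Each named consumer tracks its own read position (offset) independently.
    offsets: dict[str, int] = field(default_factory=dict)

    def append(self, event: Any) -> int:
        """Persist an event at the end of the log and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, consumer: str, max_events: int = 10) -> list[Any]:
        """Return unread events for this consumer, in order, and advance its offset."""
        start = self.offsets.get(consumer, 0)
        batch = self.events[start:start + max_events]
        self.offsets[consumer] = start + len(batch)
        return batch

    def replay(self, consumer: str, from_offset: int = 0) -> None:
        """Rewind a consumer so it re-reads history (e.g. to retrain a model)."""
        self.offsets[consumer] = from_offset


log = EventLog()
for msg in ["hi, my order is late", "where is my order?", "thanks!"]:
    log.append(msg)

# Two consumers read the same events independently and in order.
live_model = log.poll("live-model")
analytics = log.poll("analytics", max_events=1)

# Replaying lets a consumer reprocess everything from the start.
log.replay("live-model")
replayed = log.poll("live-model")
```

Because reading never deletes anything, the live model and an analytics job can consume the same chat at different speeds, and the full conversation stays available for later replay.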

Think of a live customer service chat where the AI responds in real time using internal company content and the customer’s current inquiry, or gives the human agent immediate, context-aware assistance based on prior successful conversations for similar inquiries. Human agents can also replay the entire conversation later for training purposes, since it’s all conveniently logged.

A few popular technologies that enable event streaming for real-time AI include Redpanda, Apache Kafka®, Apache Pulsar, Amazon Kinesis, and Azure Event Hubs.

So, how do you put together a scalable architecture for real-time AI? Well, to cite the report,

"Building a scalable, real-time AI system is not a single architectural choice; rather, it comprises layered optimizations that compound. Each one of these categories is a lever, and there are trade-offs between latency, persistence, computational complexity, and scalability that are deeply interconnected."

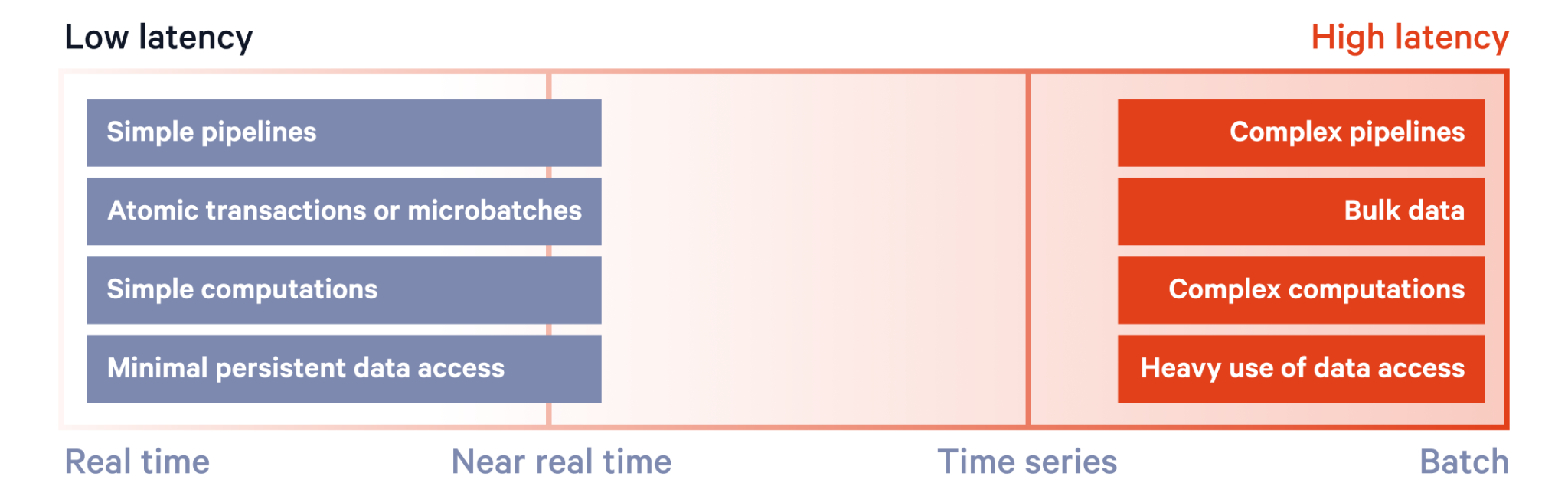

Take latency, the time between data generation and consumption. It depends on factors like pipeline complexity, data volume, and computational load.

Simply put, the more complex the pipeline, the higher the latency. (This is why AI-first companies, like poolside and Deepomatic, are choosing Redpanda to simplify their pipelines and keep latency ultra low.)
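One simple way to see why complexity costs latency: when stages run one after another, end-to-end latency is roughly the sum of each stage's latency (ignoring queuing and parallelism). The stage names and numbers below are made up purely for illustration; they are not measurements from the report.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative numbers only).
simple_pipeline = {"ingest": 2.0, "feature_lookup": 5.0, "model_inference": 10.0}
complex_pipeline = {
    "ingest": 2.0,
    "connector_hop": 8.0,
    "stream_processor": 15.0,
    "feature_lookup": 5.0,
    "model_inference": 10.0,
    "sink_hop": 8.0,
}


def end_to_end_latency_ms(stages: dict[str, float]) -> float:
    """Sequential stages add up: each extra hop contributes its own delay."""
    return sum(stages.values())


print(end_to_end_latency_ms(simple_pipeline))   # 17.0
print(end_to_end_latency_ms(complex_pipeline))  # 48.0
```

The model itself is identical in both pipelines; the extra hops alone nearly triple the total.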

To really dig into the trade-offs in each layer and learn which architectural choices suit your use case, go ahead and download the full report.


Where to run real-time AI

Aside from architectural choices and their implementations, the report also covers a rarely discussed side of AI: where to run it.

Running AI is all about location, location, location. All things being equal, the closer the AI processing happens to the data source, the lower the latency. This is because remote processing can introduce delays due to network communication and data transfer times. Also, the more intermediaries data has to pass through, the more latency it accumulates (like speed bumps on the road).

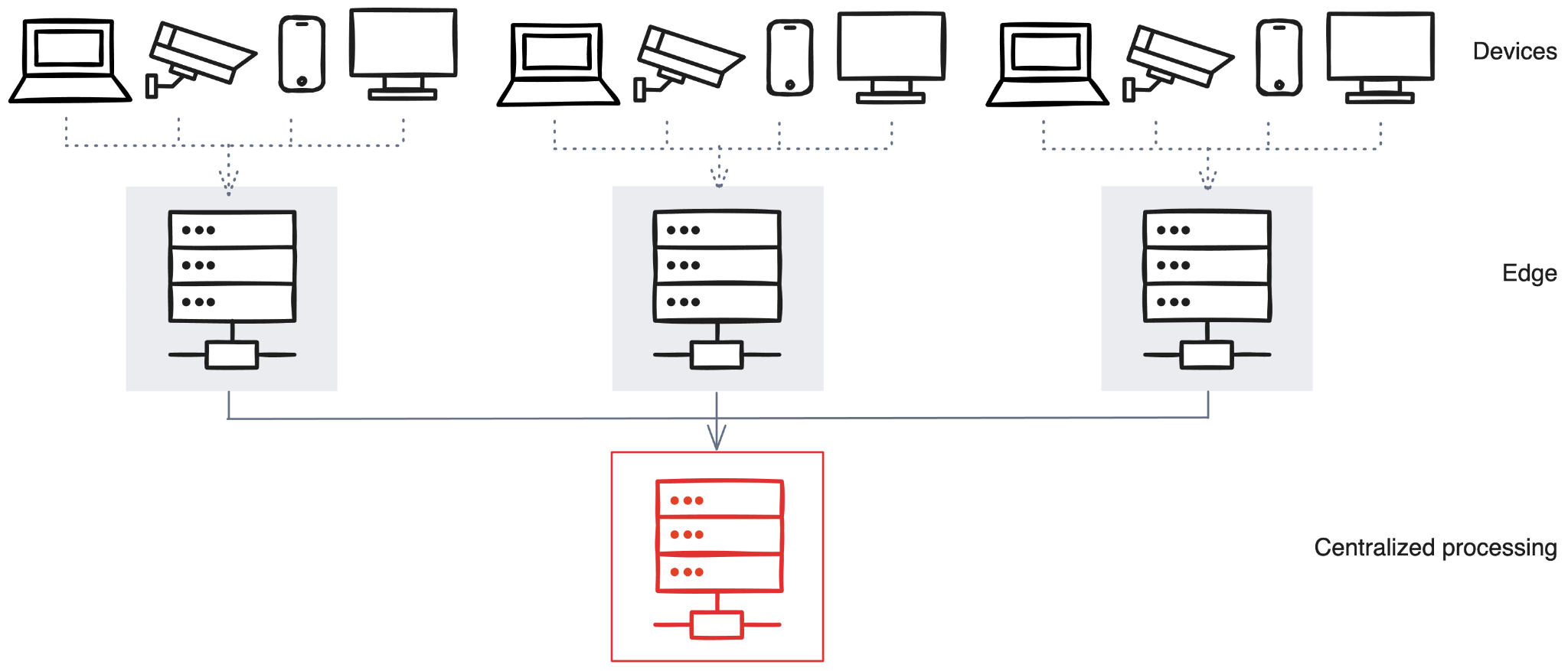

Typically, AI will run in one of three different locations: device, edge, and centralized.

Here’s a quick introduction:

- On-device AI: Processing directly on the hardware (like drones) gives you zero network latency but limited processing power.

- Edge AI: Processing on nearby infrastructure (routers, gateways, or edge appliances) keeps latency low while offering more computational power than on-device AI.

- Centralized AI: Cloud-based AI offers virtually unlimited computational resources, but at the cost of increased latency.
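The trade-off in the list above can be expressed as a simple placement rule: start as close to the data as possible and move outward only when you need more compute. The sketch below is a simplification that ignores inference time, cost, and privacy; the latency and capacity figures are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Location:
    name: str
    network_latency_ms: float  # round trip to reach the compute (hypothetical)
    compute_capacity: float    # relative compute units available (hypothetical)


# Illustrative profiles only: on-device has no network hop but little compute,
# centralized has the most compute but the longest round trip.
LOCATIONS = [
    Location("on-device", network_latency_ms=0.0, compute_capacity=1.0),
    Location("edge", network_latency_ms=5.0, compute_capacity=10.0),
    Location("centralized", network_latency_ms=60.0, compute_capacity=1000.0),
]


def choose_location(latency_budget_ms: float, compute_needed: float) -> Optional[str]:
    """Pick the closest location with enough compute that fits the latency budget."""
    for loc in LOCATIONS:  # ordered from closest to farthest
        fits_compute = loc.compute_capacity >= compute_needed
        fits_latency = loc.network_latency_ms <= latency_budget_ms
        if fits_compute and fits_latency:
            return loc.name
    return None  # no single location fits; consider a hybrid split


print(choose_location(latency_budget_ms=10, compute_needed=5))    # edge
print(choose_location(latency_budget_ms=100, compute_needed=50))  # centralized
```

A `None` result is the signal that no single tier satisfies both constraints.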

You can also opt for a hybrid approach to get the best of all worlds. As a real-world example, the New York Stock Exchange (NYSE) handles over one trillion records daily with sub-100ms latency by strategically combining edge and centralized processing.
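A common hybrid pattern, offered here as a generic sketch rather than a description of how NYSE actually does it, is to let a lightweight model at the edge handle clear-cut cases and forward only ambiguous ones to a larger centralized model. The models, thresholds, and event fields below are hypothetical placeholders.

```python
from typing import Callable


def hybrid_score(
    event: dict,
    edge_model: Callable[[dict], float],
    central_model: Callable[[dict], float],
    low: float = 0.2,
    high: float = 0.8,
) -> tuple[float, str]:
    """Score locally first; escalate only ambiguous events to the central model."""
    score = edge_model(event)
    if score <= low or score >= high:
        return score, "edge"  # confident: act immediately, no network hop
    return central_model(event), "centralized"  # uncertain: accept the extra latency


# Hypothetical stand-in models for illustration.
def edge_model(event: dict) -> float:
    return min(event["amount"] / 10_000, 1.0)


def central_model(event: dict) -> float:
    return 0.9 if event["country"] != event["home_country"] else 0.1


small = {"amount": 500, "country": "US", "home_country": "US"}
odd = {"amount": 5_000, "country": "FR", "home_country": "US"}
print(hybrid_score(small, edge_model, central_model))  # (0.05, 'edge')
print(hybrid_score(odd, edge_model, central_model))    # (0.9, 'centralized')
```

Most traffic never leaves the edge, so the network round trip is paid only on the cases where the bigger model is worth the wait.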

Top industries using real-time AI

Implementing real-time AI in trading can significantly enhance decision-making, profitability, and risk management by reducing latency in trade execution.

The financial sector exemplifies the ultimate latency-sensitive application, but there are plenty more industries already embracing real-time AI. The report focuses on the five most popular domains:

- Financial services: Processes millions of market events per second for algorithmic trading

- Cybersecurity: Detects threats as they emerge, not hours later

- AdTech: Makes bidding decisions within 100-millisecond timeouts

- Manufacturing: Prevents failures before they cascade into downtime

- Gaming: Dynamically adjusts live experiences to keep players engaged
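The AdTech constraint above, answering within a 100-millisecond timeout, is essentially a deadline on model inference. One minimal way to sketch that in Python is `asyncio.wait_for`, which cancels a slow call so you can fall back to a safe default. The scoring function, its pricing rule, and the fallback bid are hypothetical.

```python
import asyncio

BID_TIMEOUT_S = 0.100  # 100 ms auction deadline, as in the AdTech example
FALLBACK_BID = 0.0     # hypothetical: bid nothing rather than miss the deadline


async def score_bid(request: dict, model_delay_s: float) -> float:
    """Hypothetical model call; model_delay_s simulates inference time."""
    await asyncio.sleep(model_delay_s)
    return round(request["base_cpm"] * 1.2, 2)


async def bid(request: dict, model_delay_s: float) -> float:
    """Return the model's bid if it arrives in time, otherwise a safe fallback."""
    try:
        return await asyncio.wait_for(
            score_bid(request, model_delay_s), timeout=BID_TIMEOUT_S
        )
    except asyncio.TimeoutError:
        return FALLBACK_BID


fast = asyncio.run(bid({"base_cpm": 2.5}, model_delay_s=0.01))  # 3.0
slow = asyncio.run(bid({"base_cpm": 2.5}, model_delay_s=0.5))   # 0.0
```

A late answer is worth nothing in an auction, so the design prefers a cheap, predictable fallback over waiting for a better score.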

In each of these, real-time AI shortens the time between insight and action, which in turn compresses the time between opportunity and impact. (And that can only mean good things for their bottom line.)

Just imagine what it could achieve in your field.

What’s next for real-time AI (and how to get ahead)

Real-time AI is already leaving a golden footprint in some of the world’s biggest businesses, but how is the next frontier of applications shaping up? Well, the report highlights two areas in particular:

- Agentic AI: This is AI that can autonomously plan, make decisions, and act without human input. Less reactive responses, more proactive problem-solving.

- RAG: Retrieval-Augmented Generation (RAG) is where an AI model retrieves relevant information (such as from a database or documents) and then uses that context to generate accurate responses grounded in real data. (Far fewer “AI hallucinations.”)
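The retrieve-then-generate flow behind RAG can be sketched in a few lines. This toy version ranks documents by word overlap instead of embeddings and stops at building the prompt; the function names and sample documents are hypothetical, and a real system would use a vector store plus an actual LLM call.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase and split into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model by placing retrieved context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


docs = [
    "Refunds are issued within 5 business days of approval.",
    "Our support line is open 9am to 5pm on weekdays.",
    "Orders ship within 2 days from the nearest warehouse.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

In a streaming setup, the document set could be kept fresh from the event stream, so retrieved context reflects what is happening now rather than what was indexed last night.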

With the possibilities of AI swiftly transitioning from unimaginable to obvious, it’s wise to learn everything you can to meet AI where it’s at now and help shape the future of where it’ll go next.

Remember, this post was just a taster. If you really want to dig into the concepts, architectures, and implementation strategies, go ahead and download the free ebook! (Last prompt, promise.)

You can also check out the resources below to keep the momentum going. Go forth and learn more.

Handy links

How to build real-time AI the easy way

[Blog] Top AI agent use cases across industries

[Blog] What is agentic AI? An introduction to autonomous agents

[Docs] Retrieval-Augmented Generation (RAG) | Cookbooks

[YouTube] Bringing RAG to device with Redpanda Connect using LLama

[Infographic] Why event-driven data is the missing link for agentic AI | Redpanda

[On-demand] Intro to Agentic AI | Tech Talk on building private enterprise AI agents

Have questions? Ask us in the Redpanda Community on Slack.
